Driver monitoring systems (DMS) based on AI and computer vision (CV) are quickly becoming standard equipment on vehicles of every type and model. Safety standards make sensitivity and alert accuracy critical criteria when assessing whether a DMS is fit for broad adoption. Drivers should not face additional distraction from misleading alerts, as their attention should be fully focused on the driving task. Although several early-stage DMS products are already on the market, a gap remains. The good news is that it can be closed by deep learning in virtual reality with synthetic data, where AI models are trained and tested prior to real-world deployment. This leads to improved adherence to regulations, better interoperability with ADAS and, most importantly, the potential to prevent thousands of fatalities.

Synthetic Data Cloud for real-time in-cabin monitoring system development and validation of vision AI models

The Driver Monitoring System (DMS) will become a standard safety component throughout the world as early as 2023. In the United States, the Department of Transportation will be compelled to begin developing guidelines and rules to combat distracted and intoxicated driving, as well as to modernize the New Car Assessment Program (NCAP). In Europe, regulations will initially apply to distracted and drowsy driving. Eventually, Europe will also require impairment detection systems that cover alcohol and drugs.

It is always a good time to consider safety improvements through this technology. According to the National Highway Traffic Safety Administration, distracted driving was responsible for over 3,000 deaths in 2019, a 10% increase over 2018. Drunk driving has also increased. In 2019, 10,142 people were killed in drunk driving accidents. Early projections for 2020 show a 9% rise in DUI fatalities. Many people expected road deaths to decrease during the pandemic, but the reverse happened.

To raise reliability to higher safety levels, it is critical to develop scalable, complementary and interconnected AI models with high accuracy. This can largely be ensured by training the models in a virtual environment prior to real-world deployment. The SKY ENGINE AI platform enables data-scientist-friendly development of such AI models using the platform's deep learning in virtual reality technology for computer vision applications. Driver monitoring systems usually employ RGB or infrared (IR) cameras and computer vision software to verify that the driver is awake, aware and paying attention to the road. The system may be configured to perform a sequence of escalating actions, beginning with a mild alert or warning and advancing to slowing or halting the vehicle if the driver is no longer able to control it.

Key benefits of the DMS/OMS for manufacturers, fleet owners, insurers, drivers and occupants

The key benefits of using the driver and occupants monitoring system include:

- Increased safety of the driver, passengers and others on the road through detection of activities, emotions, attentiveness and drowsiness

- Alerting the driver before collisions happen, to avoid collisions rather than report on them after the fact

- Compliance with General Safety Regulations (GSR)

- Improved comfort and experience through driver and occupant identification for settings personalization, e.g. with facial recognition

- Support for autonomous driving L3+

- EuroNCAP 4-5 star rating relevance

- Helps drivers improve their skills – especially important for newer drivers

- Tracking and confirming that drivers follow the regulations and procedures in the fleet safety policy

- Significantly lower operational costs, fewer insurance claims and expenses, and fewer collisions

- Cost-efficient system based on a scalable, modular approach and seamless integration with existing systems (ADAS)

- High flexibility of adaptation to the universe of models, markets, and vehicle interiors

- Driver's position, weight and pose monitoring (3D skeleton) to facilitate optimization of the safety systems

SKY ENGINE AI approach to the in-cabin monitoring system development

For the human driver, the scene is often too complex, reactions too slow and the timeframe too short to react quickly and adequately in all conditions. The combination of the DMS with Advanced Driver-Assistance Systems (ADAS) can use predictive analytics to further boost crash-prevention capabilities. ADAS incorporates technologies such as automated emergency braking, blind-spot detection and lane departure alerts, and its capabilities may be further enhanced when integrated with the DMS. For instance, after detecting drowsiness or a high distraction level, the DMS can signal the ADAS to first increase the distance to the preceding vehicle before stopping the car completely.
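The escalation from a mild warning to widening the gap and finally stopping the car can be sketched as a small policy function. This is only an illustration under stated assumptions: the `DriverState` fields, the thresholds and the `Action` names are hypothetical and do not represent the SKY ENGINE AI platform or any ADAS vendor's interface.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Hypothetical requests a DMS could send to the ADAS."""
    NONE = "none"
    WARN_DRIVER = "warn_driver"
    INCREASE_GAP = "increase_following_distance"
    CONTROLLED_STOP = "controlled_stop"


@dataclass
class DriverState:
    drowsiness: float            # 0.0 (alert) .. 1.0 (asleep), assumed DMS score
    distraction: float           # 0.0 (focused) .. 1.0 (fully distracted)
    seconds_unresponsive: float  # time since the driver last reacted to a warning


def escalate(state: DriverState) -> Action:
    """Map a driver state to an escalating action (illustrative thresholds)."""
    risk = max(state.drowsiness, state.distraction)
    if risk < 0.3:
        return Action.NONE
    if risk < 0.6:
        return Action.WARN_DRIVER
    # High risk: widen the gap first, stop only if the driver stays unresponsive.
    if state.seconds_unresponsive < 5.0:
        return Action.INCREASE_GAP
    return Action.CONTROLLED_STOP
```

In a real vehicle the thresholds would be tuned and validated against the applicable safety requirements; the point here is only the ordering of the responses.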

Such a DMS can be developed at a fraction of the cost of real data acquisition and labelling, providing a complete solution for driver monitoring and in-cabin sensing. It is also viable to employ low-cost cameras in the car, as synthetic data and complex vision AI algorithms created in the SKY ENGINE AI platform allow reliable driver monitoring.

The following computer vision AI models for the Driver Monitoring System (DMS) can be developed and, when necessary, effortlessly retrained in the SKY ENGINE AI Synthetic Data Cloud:

- Identification of the driver in order to allow the vehicle to automatically restore its preferences and settings

- Monitor driver fatigue to alert the driver when potential drowsiness is detected

- Monitor driver attentiveness by ensuring the driver keeps their eyes on the road and hands on the wheel, and is aware of any dangerous situation

- Recognize and track any additional objects and elements floating inside the car

- Monitor actions and activities of children, babies, toddlers in a child seat

- Detect and monitor activities/actions by pets

- Pilot a user interface using gestures or gaze by automatically selecting HMI areas, highlighting important features on the road, etc.

In addition, training the AI models for the DMS in the SKY ENGINE AI platform preserves privacy, as these models are trained in virtual reality with synthetic data: humans, cars, objects, etc. Virtual vehicle interiors are used for training, testing and validation of the AI models before deployment in a real car, saving hundreds of hours and greatly reducing the overall cost of such a DMS.

Driver and occupant state (e.g. emotions, drowsiness, distraction)

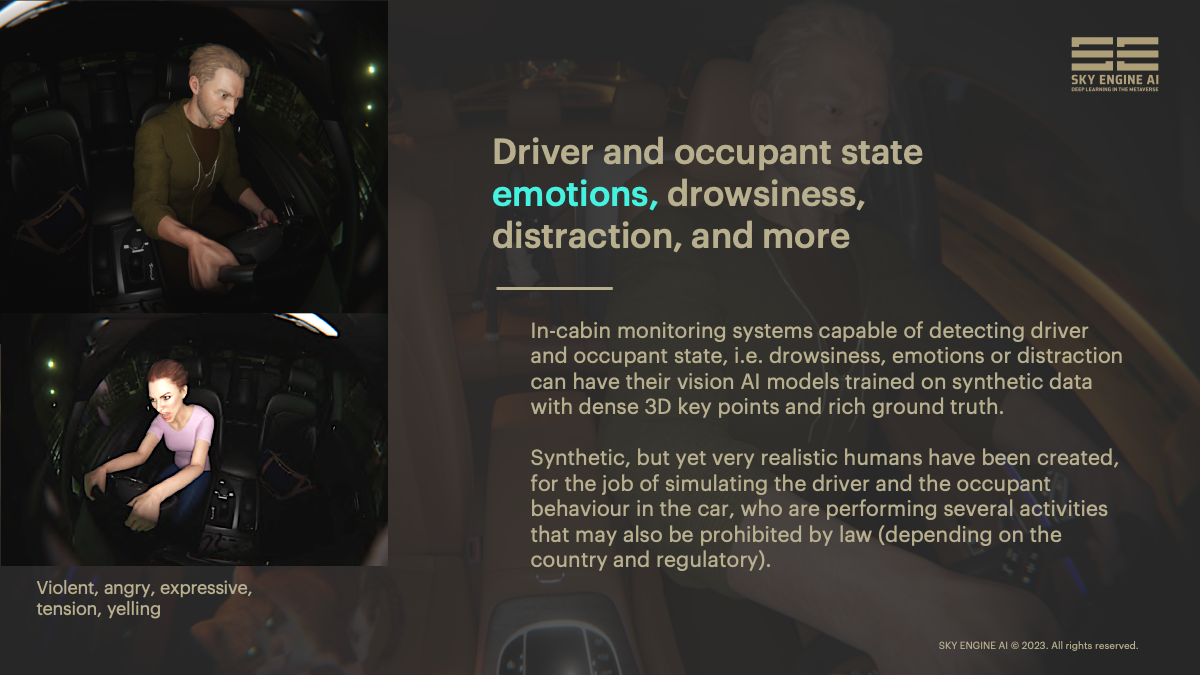

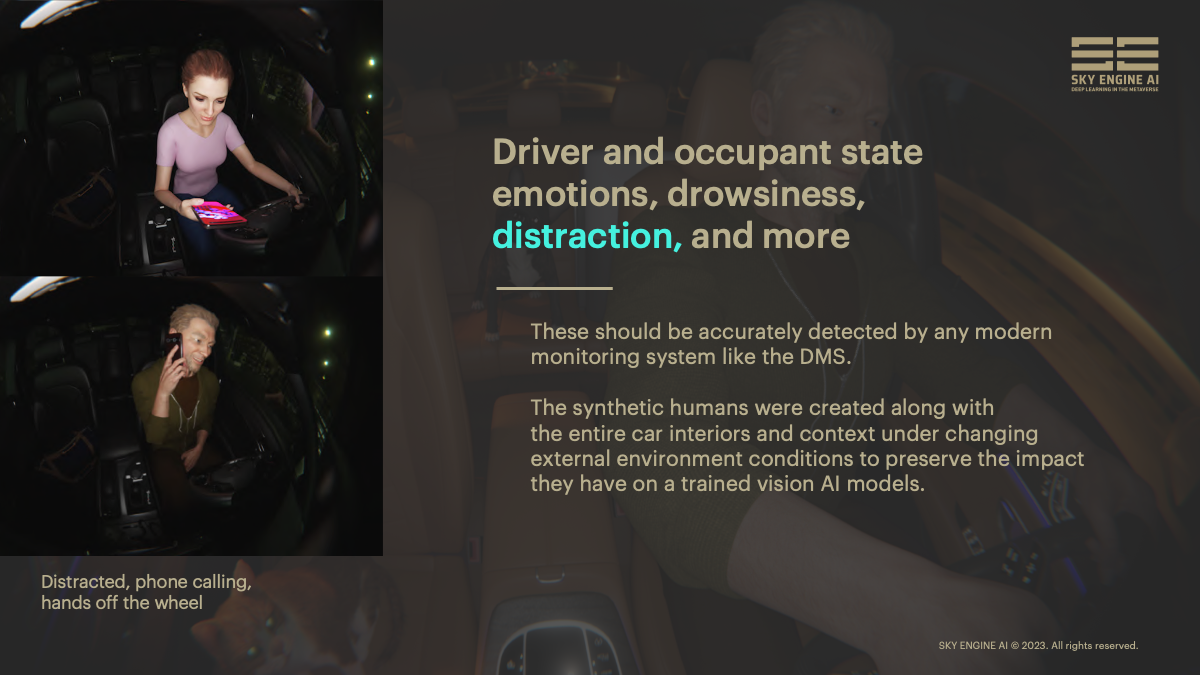

In-cabin monitoring systems capable of detecting driver and occupant state, e.g. drowsiness, emotions or distraction, can have their vision AI models trained on synthetic data with dense 3D key points and rich ground truth. Synthetic yet very realistic humans have been created to simulate driver and occupant behavior in the car, performing several activities that may also be prohibited by law (depending on the country and its regulations). These should be accurately detected by any modern monitoring system like the DMS. The synthetic humans were created along with the entire car interior and context under changing outside environment conditions to preserve the impact they have on the trained vision AI models.

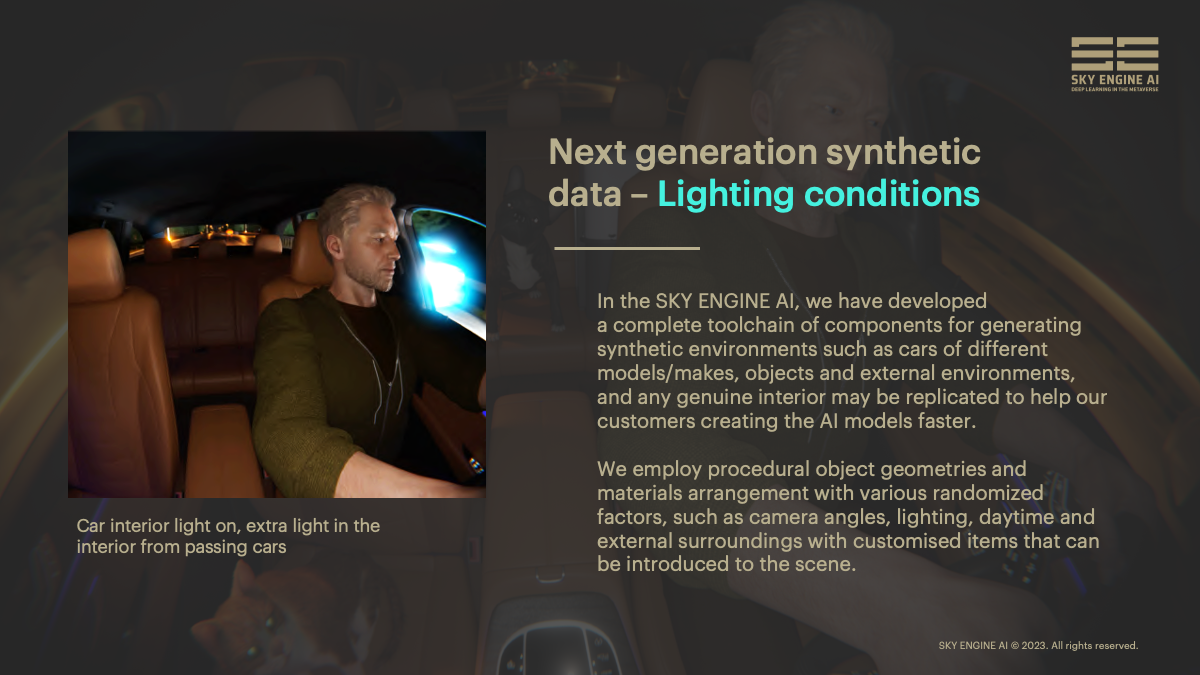

Lighting conditions – internal and external

At SKY ENGINE AI, we have developed a complete toolchain of components for generating synthetic environments, such as cars of different makes and models, objects and external environments. Any real interior may be replicated to help our customers create AI models faster. We employ procedural arrangement of object geometries and materials with various randomized factors, such as camera angles, lighting, time of day and external surroundings, as well as the introduction of customised items into the scene, to obtain highly balanced datasets and to ensure a high degree of diversity in the data itself.

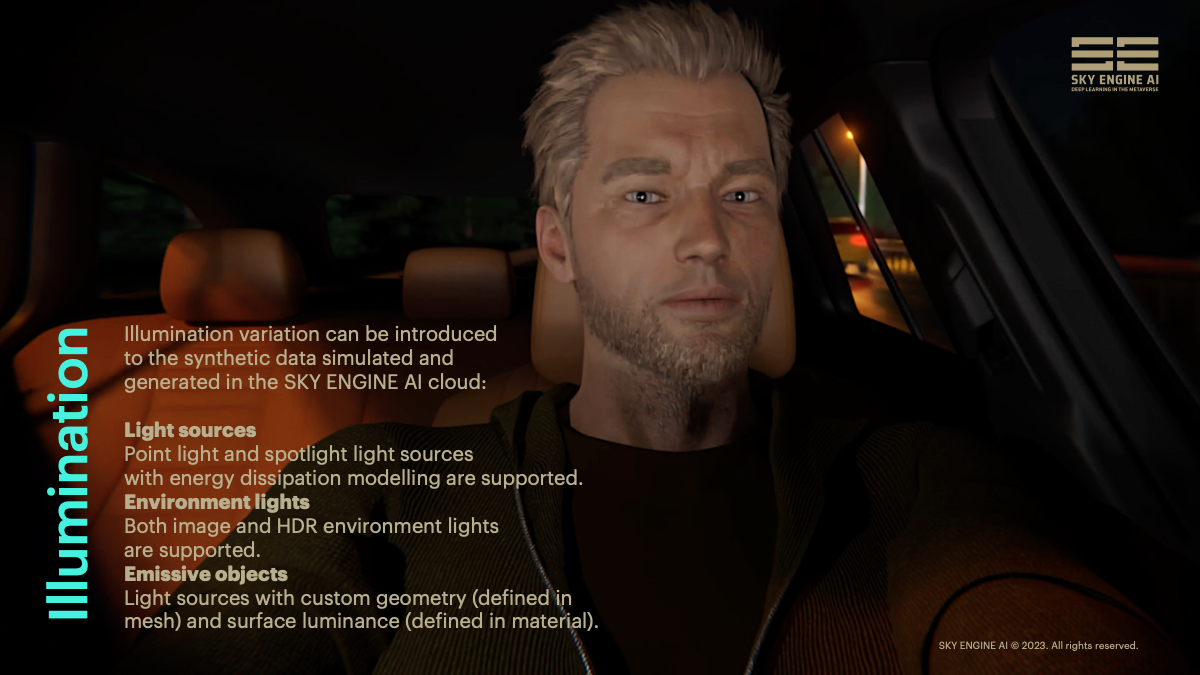

Illumination variation introduced to the synthetic data generated/simulated in the SKY ENGINE AI cloud can also include:

- Light sources: point light and spotlight light sources with energy dissipation modelling are supported.

- Environment lights: both image and HDR environment lights are supported.

- Emissive objects: light sources with custom geometry (defined in mesh) and surface luminance (defined in material) can be used.

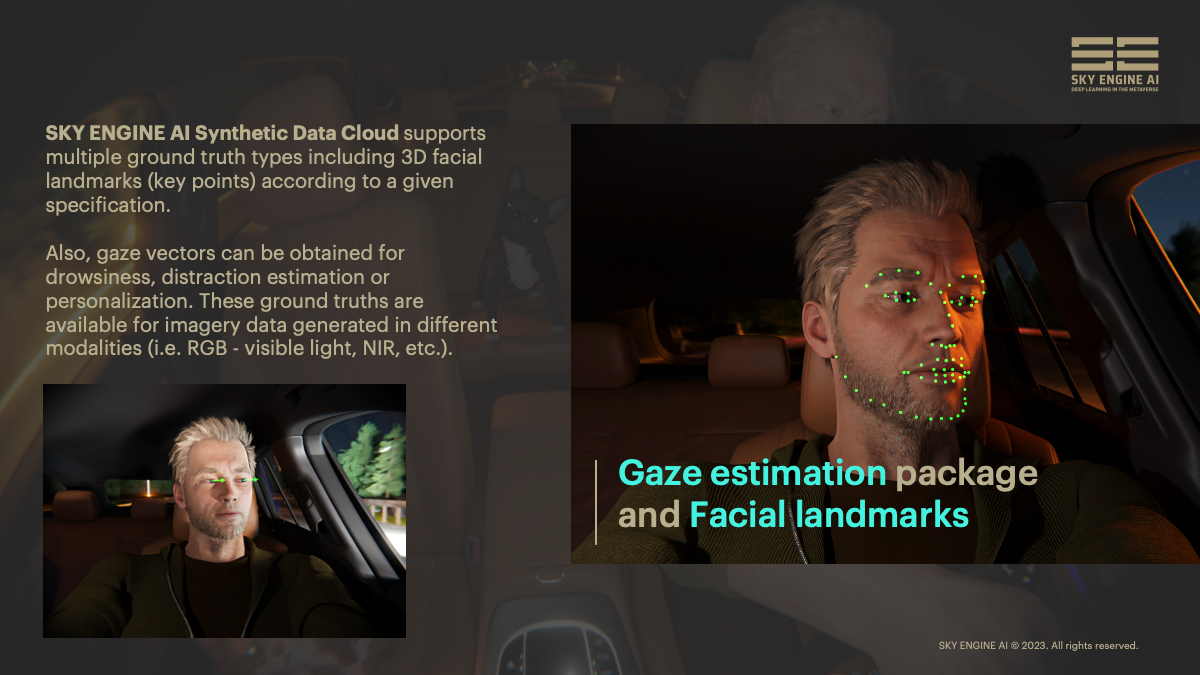

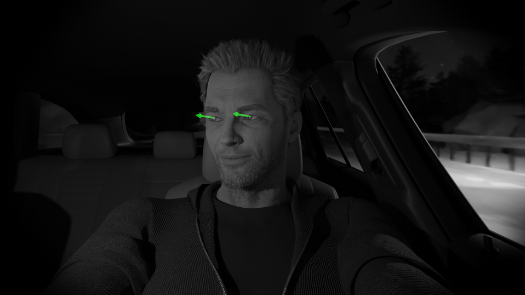

Gaze estimation package and Facial landmarks

SKY ENGINE AI Synthetic Data Cloud supports multiple ground truth types, including 3D facial landmarks (key points) according to a given specification. Gaze vectors can also be obtained for drowsiness and distraction estimation or for personalization. These ground truths are available for imagery generated in different modalities (e.g. RGB, NIR).
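To show how a gaze vector could feed a distraction estimate, the sketch below converts a 3D gaze direction into yaw and pitch angles and checks whether it falls inside an "eyes on road" window. The coordinate frame (x forward, y left, z up), the function names and the angular limits are assumptions made for illustration; they are not taken from the platform's ground truth specification.

```python
import math


def gaze_angles(gaze):
    """Return (yaw, pitch) in degrees for a 3D gaze vector.

    Assumed frame: x points forward along the road, y to the left, z up.
    """
    x, y, z = gaze
    if x == 0.0 and y == 0.0 and z == 0.0:
        raise ValueError("gaze vector must be non-zero")
    yaw = math.degrees(math.atan2(y, x))                   # left/right rotation
    pitch = math.degrees(math.atan2(z, math.hypot(x, y)))  # up/down rotation
    return yaw, pitch


def is_on_road(gaze, max_yaw_deg=20.0, max_pitch_deg=15.0):
    """True if the gaze stays within the (hypothetical) forward-looking window."""
    yaw, pitch = gaze_angles(gaze)
    return abs(yaw) <= max_yaw_deg and abs(pitch) <= max_pitch_deg
```

A distraction estimator would typically aggregate such per-frame checks over time, for example by measuring how long the gaze stays outside the window.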

Driver and occupants’ activities simulation for action recognition

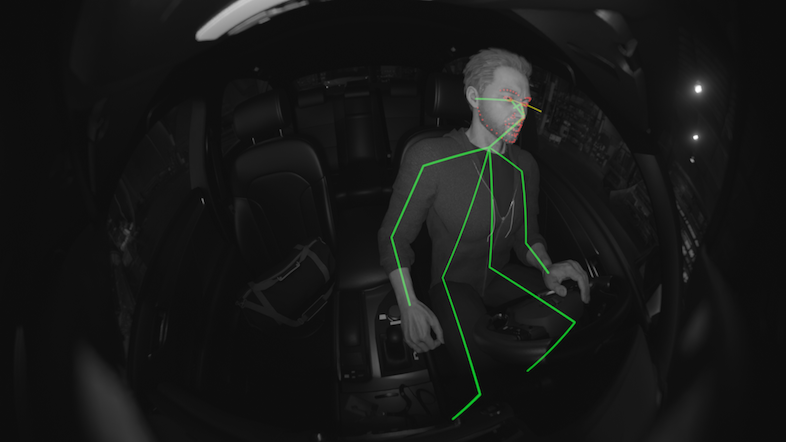

Several of the synthetic data simulation functionalities described earlier are required here. Vision AI models tasked with detecting and recognizing multiple actions taken by the driver and occupants with high accuracy, including capturing changing situations in the car interior, have to be rigorously trained with a very large number of samples of well-balanced and diverse data reflecting these activities: eating different types of food, drinking, smoking (electronic devices, cigarettes, etc.), calling, using a phone or laptop, carrying different objects, and many more. A driver and occupant 3D pose estimation setup is available with 3D skeletons and a configured key points model. In addition, there is a head pose estimation setup with the centroid position and Euler angles of the head.
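A head pose given as a centroid position plus Euler angles is often converted into a single 4x4 transform before it is used downstream. The sketch below assumes a Z-Y-X convention (yaw about z, then pitch about y, then roll about x) with angles in radians; the actual convention used for a given ground truth has to be taken from its specification, and the function names are hypothetical.

```python
import math


def head_pose_matrix(centroid, yaw, pitch, roll):
    """Build a 4x4 homogeneous transform from a head centroid and Euler angles.

    Assumed convention: R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    rot = [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]
    x, y, z = centroid
    return [rot[0] + [x], rot[1] + [y], rot[2] + [z], [0.0, 0.0, 0.0, 1.0]]


def transform_point(matrix, point):
    """Map a point from the head's local frame into the reference frame."""
    px, py, pz = point
    return tuple(
        row[0] * px + row[1] * py + row[2] * pz + row[3] for row in matrix[:3]
    )
```

With this convention, a point one unit in front of the head, `(1, 0, 0)` in the local frame, ends up one unit to the side of the centroid after a 90 degree yaw.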

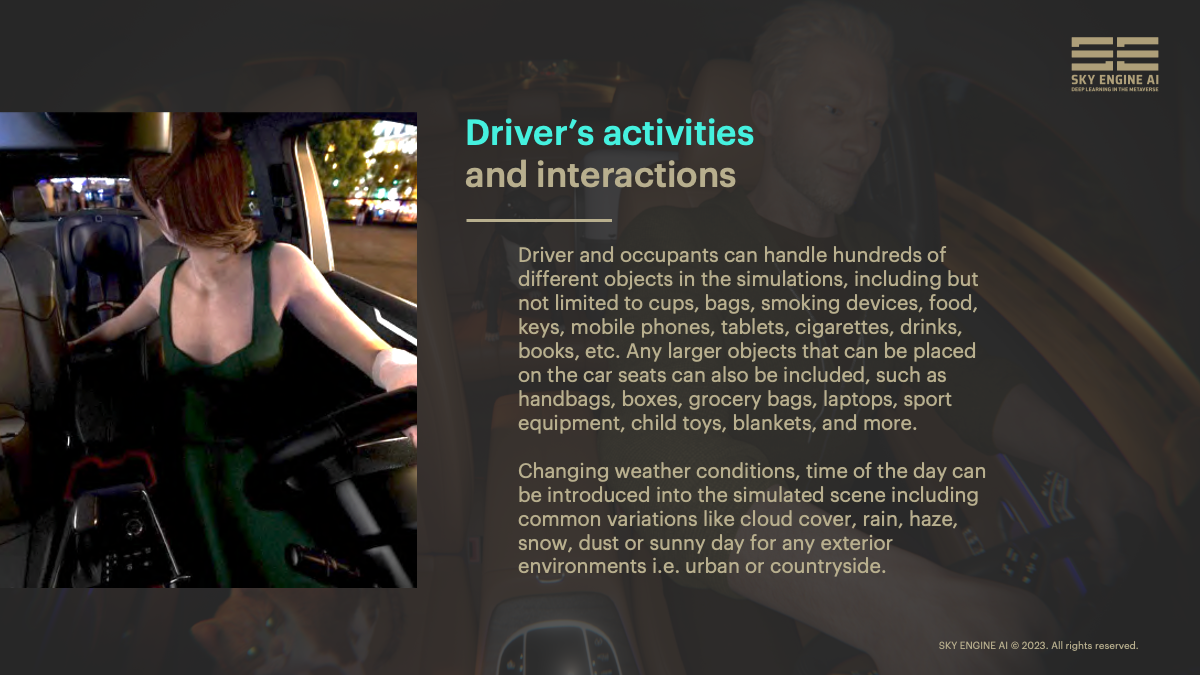

Driver/occupants objects handling. Varying weather conditions, time of the day and exterior environments

The driver and occupants can handle hundreds of different objects in the simulations, including but not limited to cups, bags, smoking devices, food, keys, mobile phones, tablets, cigarettes, drinks, books, etc. Any larger objects that can be placed on the car seats can be included, such as handbags, boxes, grocery bags, laptops, sports equipment, children's toys, blankets, and more.

Changing weather conditions and time of day can be introduced into the simulated scene, including common variations like cloud cover, rain, haze, snow, dust or a sunny day, for any exterior environment, e.g. urban or countryside. In addition, the light intensity and light spill into the car originating from these weather conditions can be parametrized, and strong sun effects on the car cabin, objects, child seats, main seats and occupants are possible.

SKY ENGINE AI Synthetic Data Cloud can cover any lighting, background, time of day or weather conditions, also reflecting the impact of the evolving light in the scene on the car’s interior and all the humans, pets and objects present.

Multi-modality support, custom camera/sensor characteristics and pixel-perfect and diverse ground truth

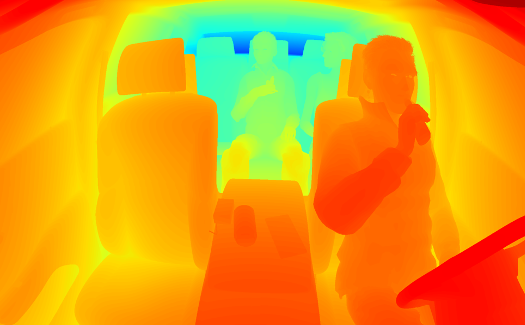

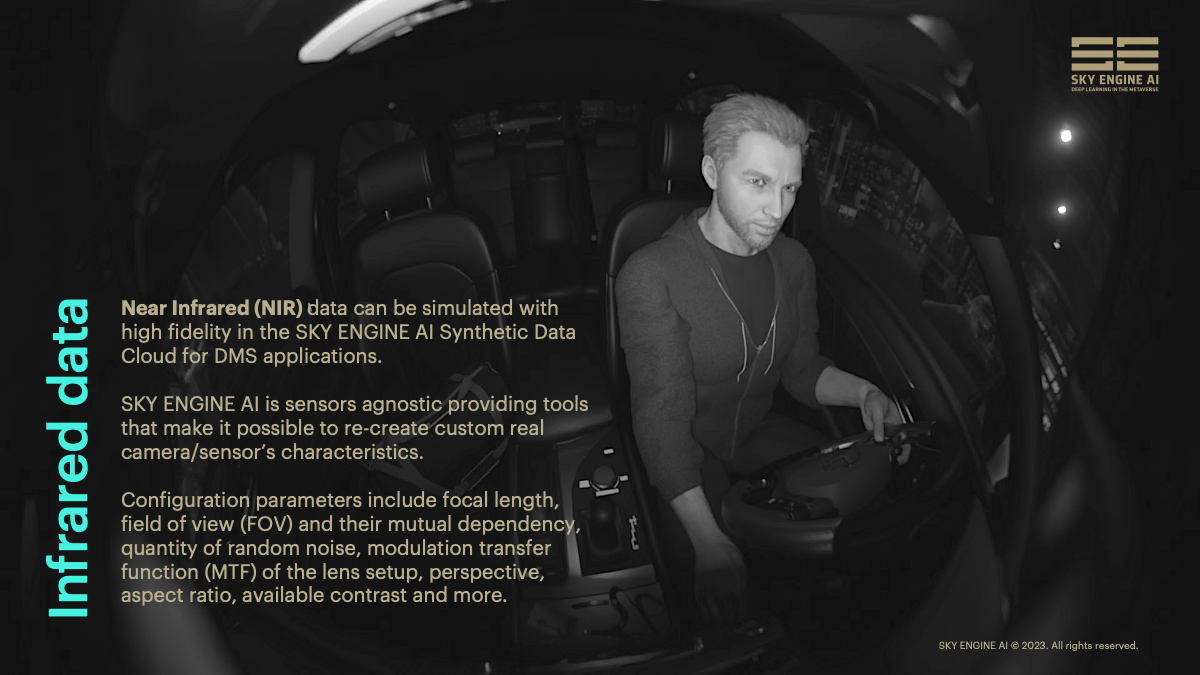

SKY ENGINE AI Synthetic Data Cloud offers a physically based simulation environment that enables production of data for numerous actions and activities of the driver and occupants in the in-cabin context. Such datasets can be simulated and generated in different modalities, including visible light (RGB sensors), near infrared (NIR), radar, lidar and more (e.g. X-ray, UWB). SKY ENGINE AI is sensor-agnostic, providing tools that make it possible to re-create the characteristics of a custom real camera or sensor, including focal length, field of view (FOV) and the relationship between them, the quantity of random noise, the modulation transfer function (MTF) of the lens setup, perspective, aspect ratio, available contrast and more.
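The relationship between focal length and field of view can be made concrete for the simplest case. The sketch below assumes an ideal pinhole (rectilinear) lens, where FOV = 2 * atan(sensor size / (2 * focal length)); fisheye and other lens types follow different projection models. The function names are illustrative only.

```python
import math


def fov_degrees(focal_length_mm, sensor_size_mm):
    """Field of view along one sensor dimension for an ideal pinhole camera."""
    if focal_length_mm <= 0 or sensor_size_mm <= 0:
        raise ValueError("focal length and sensor size must be positive")
    return math.degrees(2.0 * math.atan(sensor_size_mm / (2.0 * focal_length_mm)))


def focal_length_from_fov(fov_deg, sensor_size_mm):
    """Inverse relation: focal length that yields the requested field of view."""
    if not 0.0 < fov_deg < 180.0:
        raise ValueError("field of view must be between 0 and 180 degrees")
    return sensor_size_mm / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
```

For example, a 6 mm wide sensor behind a 3 mm lens gives a horizontal field of view of about 90 degrees, which is why matching a real camera requires both values and not just one of them.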

The platform allows generating pixel-perfect ground truth for in-cabin objects, which can be simulated with depth maps, normal vector maps, instance masks, bounding boxes and 3D key points ready for AI training. Moreover, there are render passes dedicated to deep learning, support for animation and motion capture systems, and determinism with advanced machinery for randomization strategies of scene parameters for an active learning approach.
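Because the ground truth types are consistent with each other at pixel level, one can be derived from another. As an illustration, the sketch below computes 2D bounding boxes from an instance mask. The mask layout (a grid of integer instance ids with 0 as background) and the function name are assumptions for this example, not the platform's export format.

```python
def boxes_from_instance_mask(mask, background=0):
    """Return {instance_id: (x_min, y_min, x_max, y_max)} in pixel coordinates.

    `mask` is a list of rows; each entry is an integer instance id.
    """
    boxes = {}
    for y, row in enumerate(mask):
        for x, instance_id in enumerate(row):
            if instance_id == background:
                continue
            if instance_id not in boxes:
                boxes[instance_id] = [x, y, x, y]
            else:
                box = boxes[instance_id]
                box[0] = min(box[0], x)
                box[1] = min(box[1], y)
                box[2] = max(box[2], x)
                box[3] = max(box[3], y)
    return {instance_id: tuple(box) for instance_id, box in boxes.items()}
```

With real image sizes an array library would be the practical choice; the nested loops are kept here so the example runs without dependencies.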

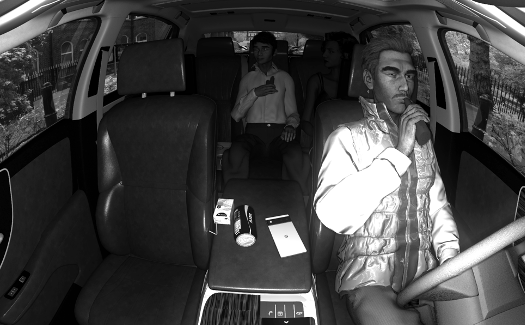

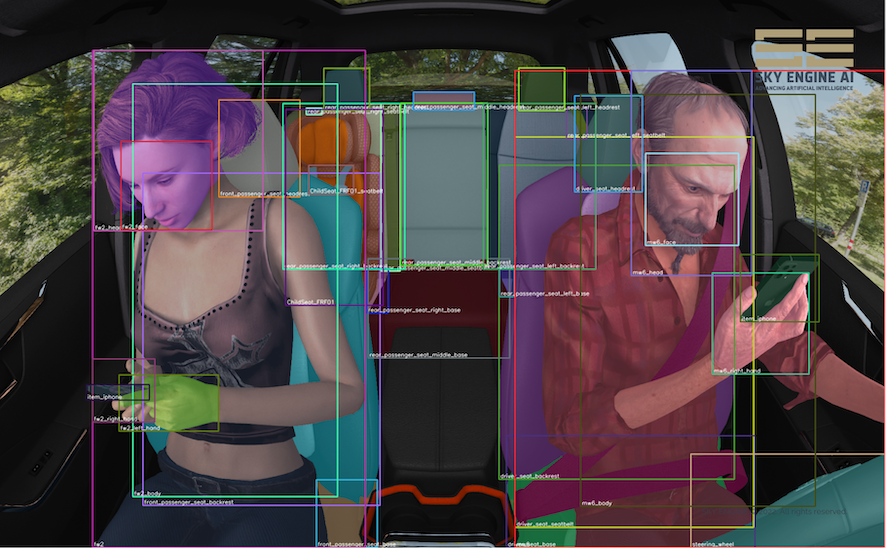

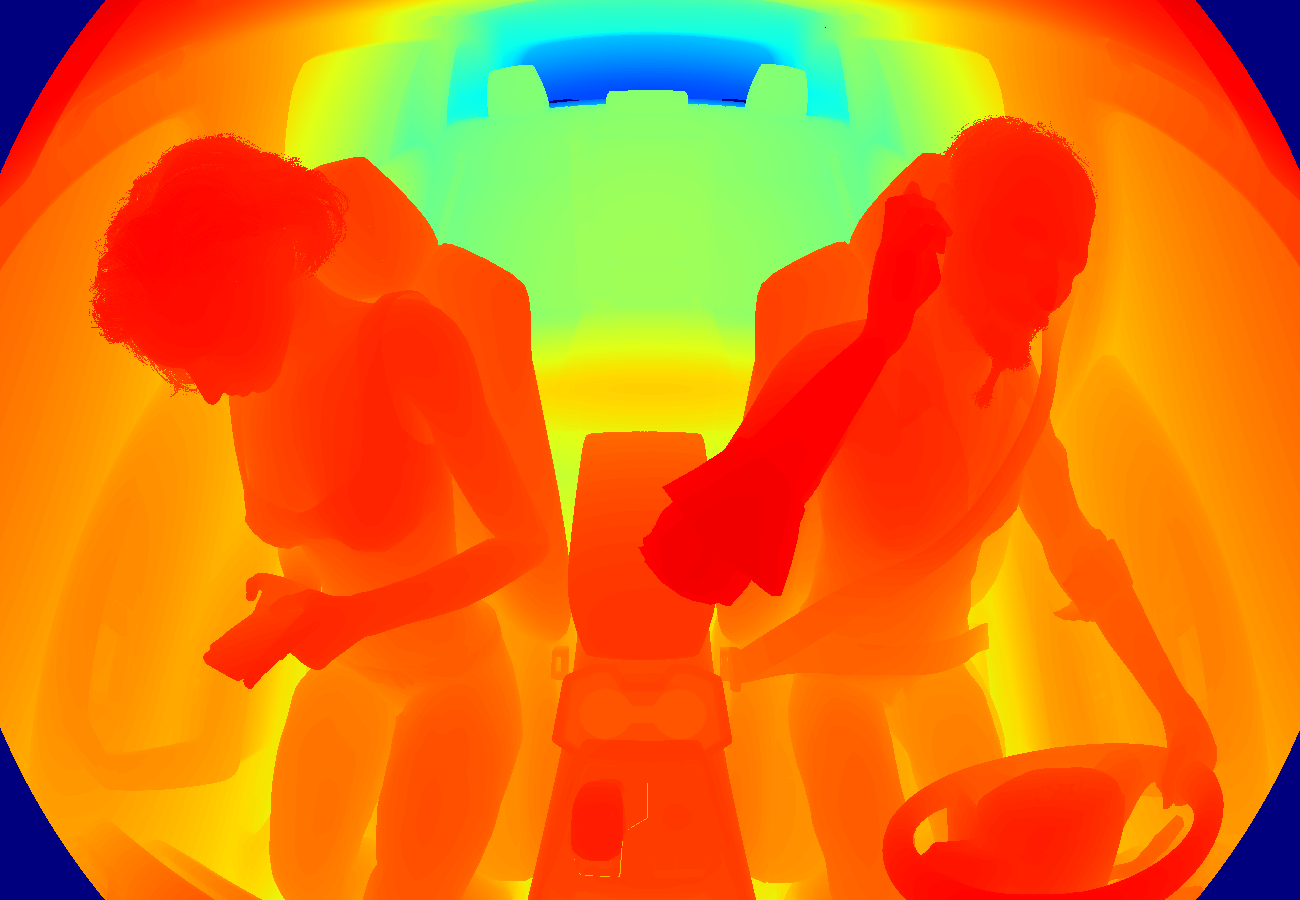

Synthetic images above: Car interior, driver, two occupants on a rear seat, objects and ground truths simulated and generated in the SKY ENGINE AI Synthetic Data Cloud, 6 images: (Left) 3D key points on humans; Depth map; Semantic masks on objects, humans and separately on multiple components of these objects and humans. (Right) Smoking action and objects in the car (NIR); 2D bounding boxes and masks (NIR); Normal vectors map.

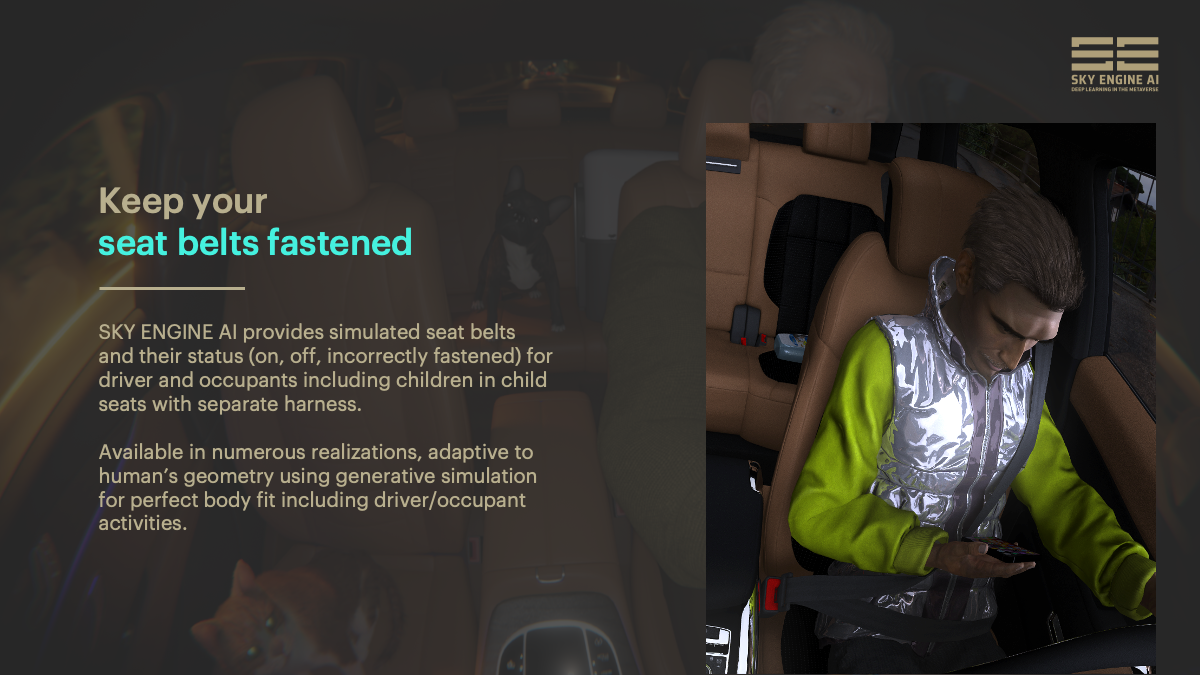

Keep your seat belts fastened

SKY ENGINE AI provides simulated seat belts and their status (on, off, incorrectly fastened) for the driver and occupants, including children in child seats with a separate harness. Seat belts are available in numerous realizations and adapt to the human’s geometry using generative simulation for a perfect body fit, including during driver/occupant activities.

Gesture recognition

Gesture simulation covers, e.g., hands-on-wheel monitoring, indications, pose estimation, object handling, and touching controls and panels. It includes a detailed 3D skeleton of the hand, palm and fingers, and can serve hand/palm pose estimation models or spatiotemporal models operating on extracted joint positions.

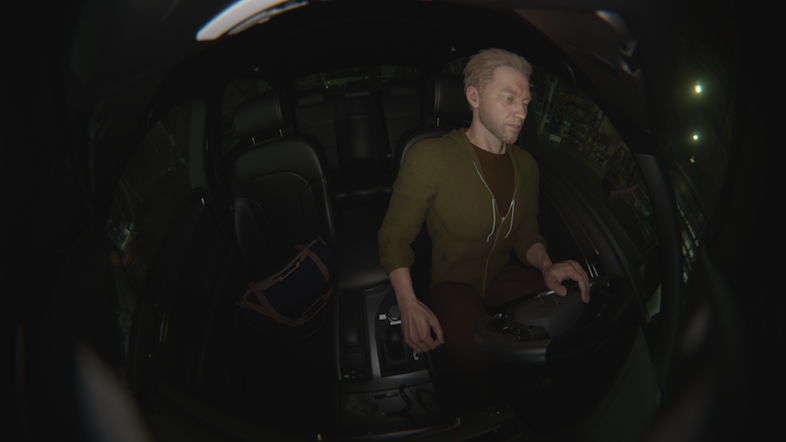

Driver gesture recognition AI models training with synthetic data in the SKY ENGINE AI Synthetic Data Cloud (video). 3D pose estimation (keypoints and skeleton). (Left) Infrared synthetic data, (Right) Visible light data.

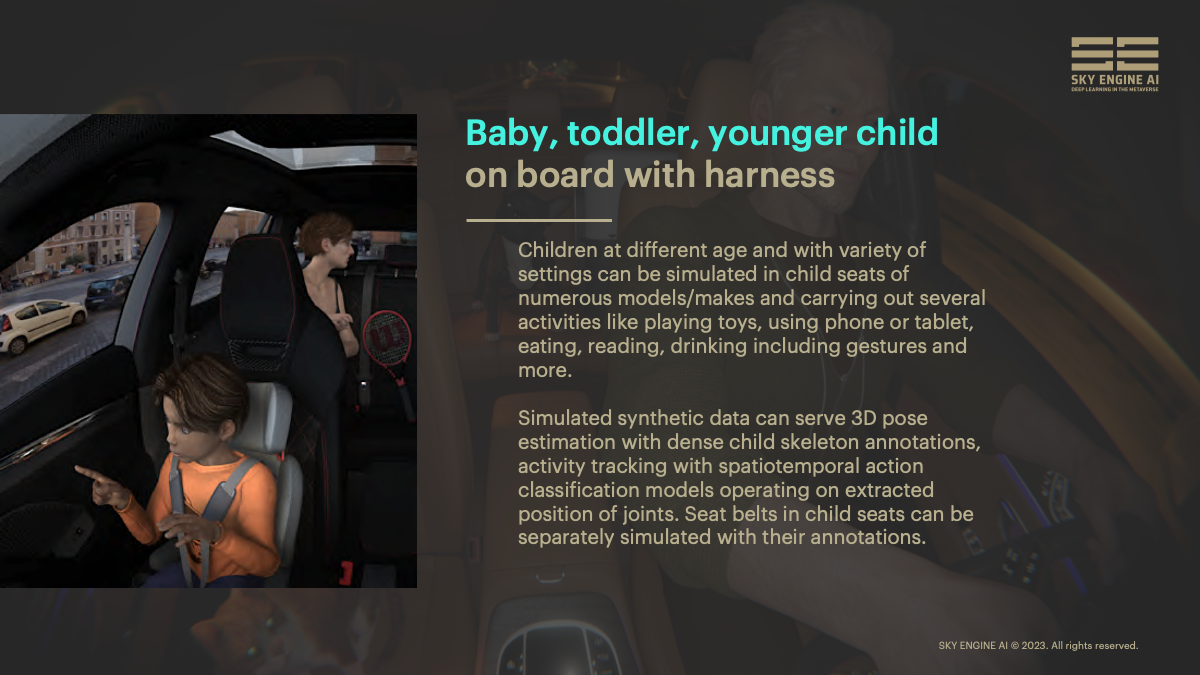

Baby, toddler, younger child on board

Children of different ages and in a variety of settings can be simulated in child seats of numerous makes and models, carrying out activities like playing with toys, using a phone or tablet, eating, reading, drinking, gesturing and more. The simulated synthetic data can serve 3D pose estimation with dense child skeleton annotations, and activity tracking with spatiotemporal action classification models operating on extracted joint positions. Seat belts in child seats can be separately simulated with their annotations.

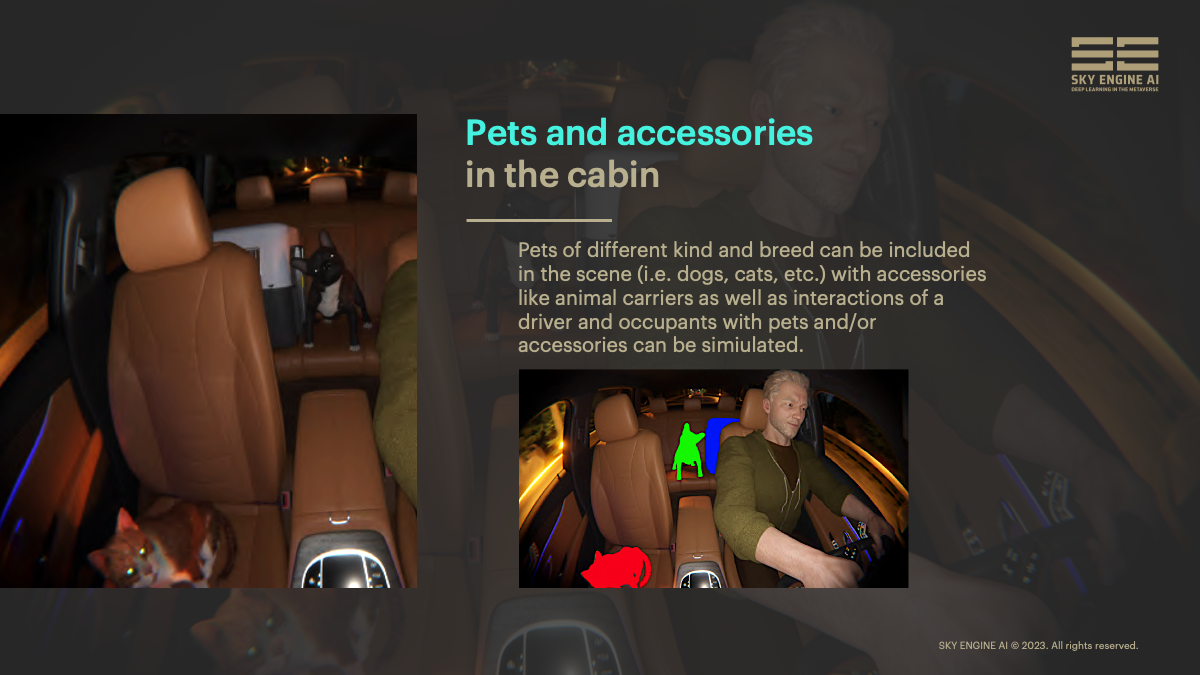

Pets and accessories in the cabin

Pets of different kinds and breeds (e.g. dogs, cats) can be included in the scene along with accessories such as animal carriers. Interactions of the driver and occupants with pets and/or accessories can also be simulated.

Meet our Synthetic Drivers and Occupants in Context

The SKY ENGINE AI Synthetic Data Cloud enables the creation of very realistic humans and objects to propel the development of next-generation in-cabin monitoring systems. Meet Tina and Mark, who have been created, along with a hundred other drivers, to simulate driver and occupant behaviour in the car, performing several activities that may be prohibited by law (depending on the country and its regulations) but should be accurately detected by any modern monitoring system like the DMS. These synthetic humans were created along with the entire car interior and context under changing outside environment conditions to preserve the impact they have on the trained vision AI models.

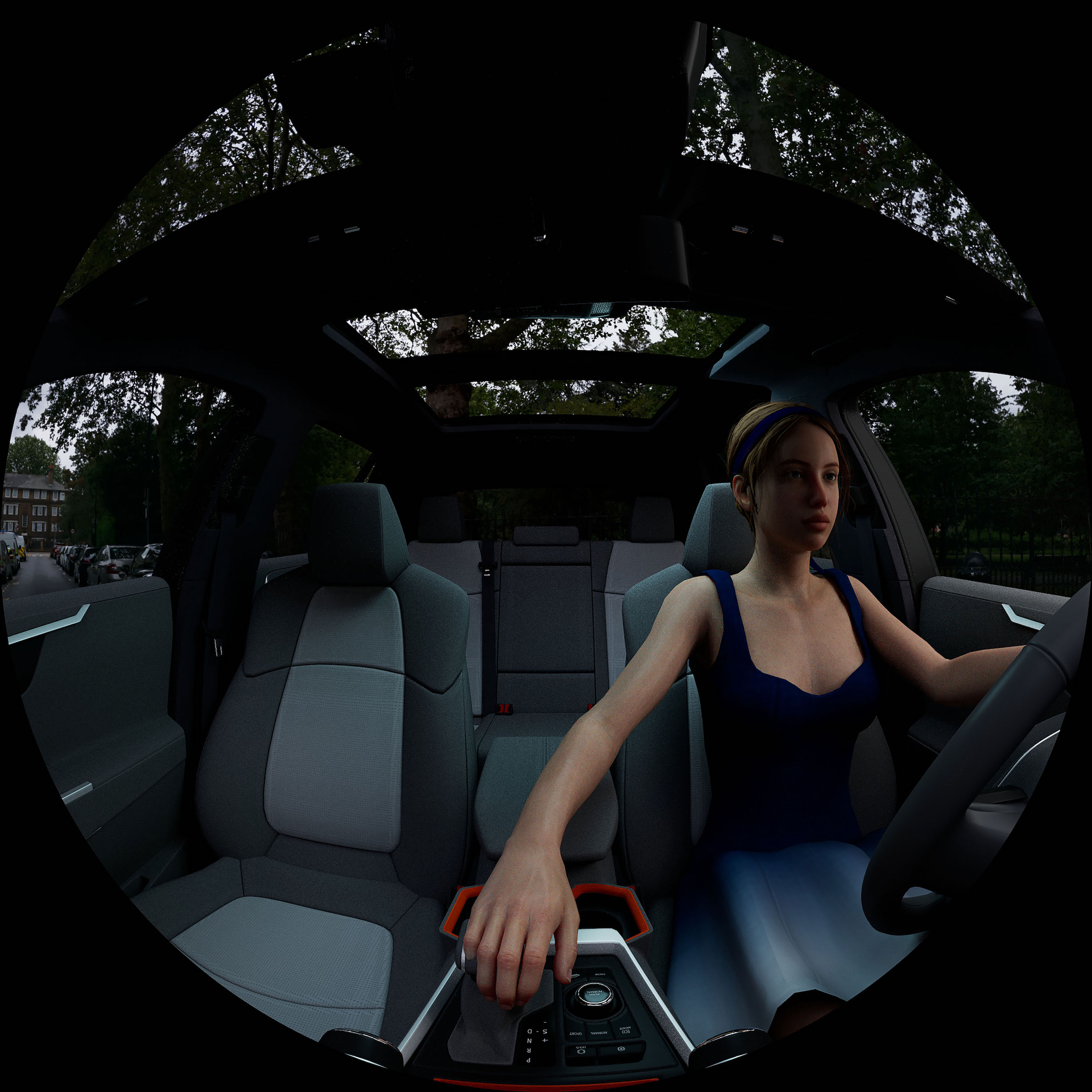

Simulated RGB synthetic data for in-cabin monitoring with highly complex pixel-perfect ground truths generated in the SKY ENGINE AI cloud, 8 images: (Left, top-bottom) Meet Tina – Driver and Occupant; Driver and car interior – camera in the center stack rear view mirror; SKY ENGINE AI complex ground truth system – semantic segmentation masks for separated body parts and 3D key points including invisible face landmarks; Ground truth – depth map; (Right, top-bottom) Meet Mark – Driver; Driver and car interior – camera in the console; Complex ground truth system – 2D bounding boxes; Semantic segmentation masks.

Synthetic data of car interior, driver and objects (e.g. bag, keys, mobile phone) and ground truths simulated and generated in the SKY ENGINE AI platform, 6 images: (Left) Drowsy male driver (RGB), Drowsy male driver (IR), Semantic segmentation, (Right) Keypoints and gaze estimation, Distracted female driver, Depth map.

Driver-monitoring systems capable of detecting drowsiness or distraction are only the beginning. As these technologies develop, they will become a part of a larger interior sensing platform that offers personalization, improved safety, infotainment, and even communication with smart home systems. The DMS can recognize the driver and enable personalisation to change the seat, temperature, side mirrors, and so on to the driver's preferences. The technologies will be able to determine if the driver is intoxicated or experiencing a medical emergency. Drivers will be able to operate some functions in the car by using their eyes or gestures and the camera can watch both the driver and the cabin, allowing it to recognize whether a kid has been left in a car seat, assess whether an essential object has been forgotten, or assist in personalizing infotainment, HVAC, or other in-cabin capabilities.

Synthetic data simulation & ground truth generation for the DMS

SKY ENGINE AI Synthetic Data Cloud offers an efficient solution for simulating data in several modalities, including ground truth generation of every kind outlined above, so that computer vision developers can quickly build their data stack and seamlessly train AI models covering numerous situations and corner cases in the dataset. The parameters that can be modified within a scene created in the SKY ENGINE AI cloud include the following:

- Rendered image resolution and ray tracing quality.

- Scene textures resolution (full, reduced for space and time optimization).

- Scene textures parameters (car interior materials, patterns, colors).

- Outside environmental maps type.

- Environmental light intensity.

- IR point lights strength, angle and direction.

- Lens parameters: type (pinhole, fisheye, anamorphic), focal lengths, principal point, distortion coefficients (radial, tangential).

- Modality selector (visible light, near infrared).

- Camera position and orientation (like rear view mirror, console, etc.).

- Camera randomization ranges (X, Y, Z, roll, pitch, yaw).

- Shaders’ parameters (clearcoat level, sheen level, subsurface scattering level, light iterations, antialiasing level, and more).

- Post Processing (tone mapping, AI denoising, blur / motion blur, light glow).

- Occupancy probabilities on each seat (adults, children on child seats, empty child seats, big items, piles of items, empty seats). For items, also between seats.

- Driver and occupant action probabilities (drinking, eating, smoking, driving, idle, looking around, grabbing an object from another seat etc.).

- Seatbelt status (fastened/unfastened) probabilities.

Note: All parameters can be defined as the rules for randomization (range, predefined distribution, custom probabilistic distribution).
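The three kinds of randomization rule named in the note (range, predefined distribution, custom probabilistic distribution) can be sketched with a small sampler. Everything here is hypothetical: the rule schema, the parameter names and the values in `EXAMPLE_RULES` are invented for illustration and are not the platform's configuration format.

```python
import random

# Hypothetical rule set; names and numbers are illustrative only.
EXAMPLE_RULES = {
    # Range: uniform between two bounds.
    "camera_yaw_deg": {"kind": "range", "low": -5.0, "high": 5.0},
    # Predefined distribution: a normal distribution.
    "env_light_intensity": {"kind": "normal", "mean": 1.0, "std": 0.2},
    # Custom probabilistic distribution: explicit per-value probabilities.
    "seatbelt_status": {
        "kind": "categorical",
        "probs": {"fastened": 0.7, "unfastened": 0.2, "incorrect": 0.1},
    },
}


def sample_scene(rules, rng):
    """Draw one value per parameter according to its randomization rule."""
    scene = {}
    for name, rule in rules.items():
        kind = rule["kind"]
        if kind == "range":
            scene[name] = rng.uniform(rule["low"], rule["high"])
        elif kind == "normal":
            scene[name] = rng.gauss(rule["mean"], rule["std"])
        elif kind == "categorical":
            values = list(rule["probs"])
            weights = list(rule["probs"].values())
            scene[name] = rng.choices(values, weights=weights, k=1)[0]
        else:
            raise ValueError(f"unknown rule kind: {kind!r}")
    return scene
```

Passing a seeded generator, for example `sample_scene(EXAMPLE_RULES, random.Random(42))`, makes each draw reproducible, which is useful when a specific scene needs to be regenerated.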

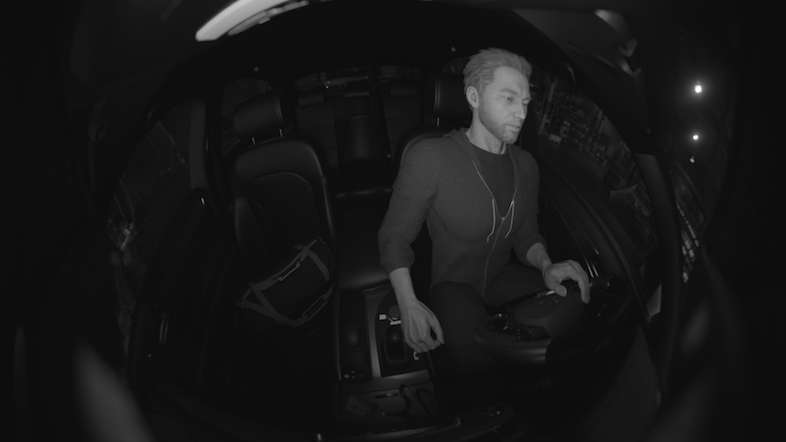

Facial expressions simulations: drowsiness, yawning, smiling, blinking, reaction to dazzling light, looking around, checking mirrors – in-cabin driver monitoring scene at night, car interior lighting on (video).

Universe of applications: insurance, cab, lorry, train, airplane fleet

The SKY ENGINE AI platform can be used effectively to simulate physically accurate, photorealistic synthetic data in the RGB and IR modalities, enabling accelerated training of accurate AI models for driver monitoring applications. These computer vision models, when incorporated in a DMS/OMS, enable real-time assistance in identifying in-car activities and help recognize event risks, such as their probability, direct cause, likelihood of potential injuries and severity accumulated over a pre-defined period of time. Accidents can be predicted, managed and, at best, largely prevented. Insurers may be advised of the most likely events, given on-the-road and in-cabin circumstances, with a high level of accuracy. This can assist in discussing policies and applying discounts for drivers or fleet owners who reinforce strict safety measures and report fewer issues, and in recommending additional training assistance. Such a system can apply driver scoring for post-accident analysis of behaviour to further optimize the insurance policy and its cost, which can be especially interesting for fleet owners. In the premium offering, drivers can receive monetary value in the form of monthly cash-backs, based on their road safety performance as measured by accurate, evidence-based risk indicators for insurance companies.

The DMS is expected to keep all data on board the vehicle to avoid triggering privacy concerns. However, to help resolve disputed insurance claims, it could be operated with recording mode on. Fleet owners or insurance companies can get insights into the detailed status of the driver and can alert a dispatch center or the driver to deliver real-time, life-saving alerts. Insurers can offer Pay-How-You-Drive (PHYD) policy models built on accurate, evidence-based risk indicators, including driver scoring. Drivers can then receive monetary value in the form of monthly cash-backs or policy discounts, based on their road safety performance.

Let us know about your use cases and get access to the SKY ENGINE AI platform, or get tailored AI models or synthetic datasets for your driver and occupant monitoring applications. As we support many more industries than just automotive, a broad range of data customization is available, even for specific sensors and environments.